01. Overview

Kubernetes-operator

- https://kubernetes.io/ko/docs/concepts/extend-kubernetes/operator/

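An Operator, a concept introduced by CoreOS, is an application-specific controller that extends the Kubernetes API.

It is used to create, configure, and manage complex stateful applications such as databases, caches, and monitoring systems.

An Operator builds on the Kubernetes concepts of resources and controllers, but it also encodes application-specific operational knowledge.

For example, to write a database Operator you need to be very familiar with how that database is operated and maintained.

The key to building an Operator is the design of its CRDs (CustomResourceDefinitions).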

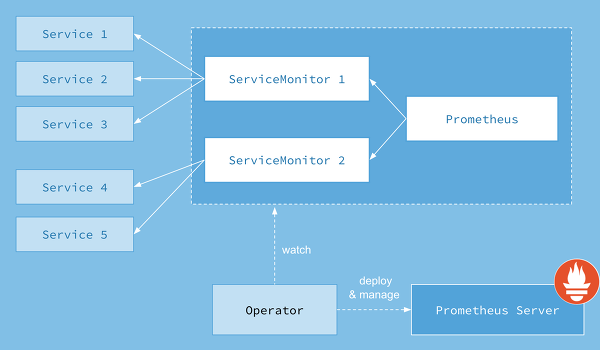

ServiceMonitor

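- A ServiceMonitor is a custom resource, defined by a CRD (CustomResourceDefinition), that lets the Operator (a pattern first introduced by CoreOS) manage which metrics Prometheus collects.

- When adding metrics, you do not edit prometheus.yml by hand. Instead you create a separate Kubernetes object, a ServiceMonitor, in the same way you would create a ConfigMap or a Deployment.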

- https://www.fatalerrors.org/a/rapid-deployment-of-kubernetes-monitoring-system-kube-prometheus.html
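To make the ServiceMonitor idea concrete, here is a minimal sketch of what such an object might look like. The application name `example-app`, its `app=example-app` label, the port name `http-metrics`, and the file name are all hypothetical. The `release: kube-prometheus-stack` label assumes the chart's default selector, which picks up ServiceMonitors labeled with the Helm release name; check your Prometheus resource if yours differs. `monitor-po` is the namespace used later in this guide.

```shell
#!/bin/sh
set -eu

# Hypothetical example: a Service labeled app=example-app in the "default"
# namespace that exposes metrics on a port named "http-metrics".
cat > example-servicemonitor.yaml <<'EOF'
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: example-app
  namespace: monitor-po
  labels:
    release: kube-prometheus-stack   # assumed to match the Prometheus serviceMonitorSelector
spec:
  selector:
    matchLabels:
      app: example-app               # hypothetical Service label
  namespaceSelector:
    matchNames:
    - default
  endpoints:
  - port: http-metrics               # hypothetical named port on the Service
    path: /metrics
    interval: 30s
EOF

# Opt-in: set APPLY=1 to create the object in the current cluster.
if [ "${APPLY:-0}" = "1" ]; then
  kubectl apply -f example-servicemonitor.yaml
fi
```

Once applied, the Operator regenerates the Prometheus scrape configuration, so no manual edit of prometheus.yml is needed.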

Summary

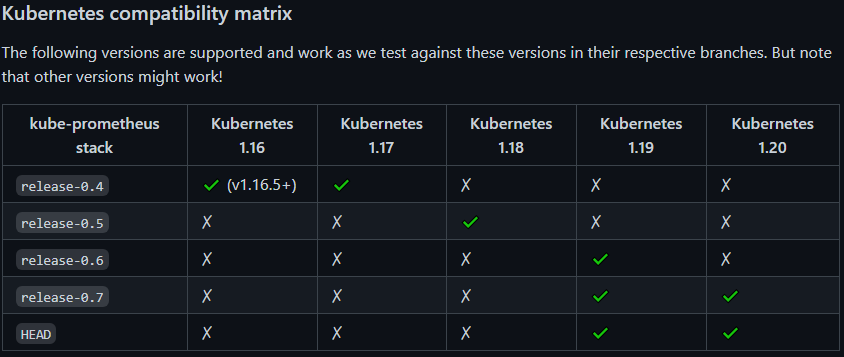

- The kube-prometheus-stack chart installs the kube-prometheus stack: a collection of Kubernetes manifests, Grafana dashboards, and Prometheus rules, combined with documentation and scripts, that provides easy-to-operate end-to-end Kubernetes cluster monitoring with Prometheus using the Prometheus Operator.

Dependencies

By default this chart installs additional, dependent charts: kube-state-metrics, prometheus-node-exporter, and grafana.

Guide for kube-prometheus-stack with Helm3.x

02. Prerequisites

03. Create the Namespace

sansae@win10pro-worksp:$ kubectl create ns monitor-po

04. Install Chart of kube-prometheus-stack

sansae@win10pro-worksp:$ helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

"prometheus-community" already exists with the same configuration, skipping

sansae@win10pro-worksp:$

sansae@win10pro-worksp:$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "ingress-nginx" chart repository

...Successfully got an update from the "elastic" chart repository

...Successfully got an update from the "dynatrace" chart repository

...Successfully got an update from the "prometheus-community" chart repository

Update Complete. ⎈Happy Helming!⎈

sansae@win10pro-worksp:$ helm install kube-prometheus-stack prometheus-community/kube-prometheus-stack -n monitor-po

NAME: kube-prometheus-stack

LAST DEPLOYED: Wed Mar 31 10:30:38 2021

NAMESPACE: monitor-po

STATUS: deployed

REVISION: 1

NOTES:

kube-prometheus-stack has been installed. Check its status by running:

kubectl --namespace monitor-po get pods -l "release=kube-prometheus-stack"

Visit https://github.com/prometheus-operator/kube-prometheus for instructions on how to create & configure Alertmanager and Prometheus instances using the Operator.

sansae@win10pro-worksp:$ kubectl get all -n monitor-po

NAME READY STATUS RESTARTS AGE

pod/alertmanager-kube-prometheus-stack-alertmanager-0 2/2 Running 0 91s

pod/kube-prometheus-stack-grafana-6b5c8fd86c-lwcv2 2/2 Running 0 93s

pod/kube-prometheus-stack-kube-state-metrics-7877f4cc7c-b2nnc 1/1 Running 0 93s

pod/kube-prometheus-stack-operator-5859b9c949-4n24x 1/1 Running 0 93s

pod/kube-prometheus-stack-prometheus-node-exporter-5f4pm 1/1 Running 0 93s

pod/kube-prometheus-stack-prometheus-node-exporter-5fbc7 1/1 Running 0 93s

pod/kube-prometheus-stack-prometheus-node-exporter-ggj8c 1/1 Running 0 93s

pod/kube-prometheus-stack-prometheus-node-exporter-h5cfj 1/1 Running 0 93s

pod/kube-prometheus-stack-prometheus-node-exporter-hvpsf 1/1 Running 0 93s

pod/kube-prometheus-stack-prometheus-node-exporter-mbt54 1/1 Running 0 93s

pod/kube-prometheus-stack-prometheus-node-exporter-s5zd9 1/1 Running 0 93s

pod/kube-prometheus-stack-prometheus-node-exporter-v7bsj 1/1 Running 0 93s

pod/kube-prometheus-stack-prometheus-node-exporter-v7sts 1/1 Running 0 93s

pod/kube-prometheus-stack-prometheus-node-exporter-vnmx5 1/1 Running 0 93s

pod/prometheus-kube-prometheus-stack-prometheus-0 2/2 Running 1 91s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 91s

service/kube-prometheus-stack-alertmanager ClusterIP 10.0.92.245 <none> 9093/TCP 93s

service/kube-prometheus-stack-grafana ClusterIP 10.0.240.51 <none> 80/TCP 93s

service/kube-prometheus-stack-kube-state-metrics ClusterIP 10.0.47.252 <none> 8080/TCP 93s

service/kube-prometheus-stack-operator ClusterIP 10.0.215.243 <none> 443/TCP 93s

service/kube-prometheus-stack-prometheus ClusterIP 10.0.152.193 <none> 9090/TCP 93s

service/kube-prometheus-stack-prometheus-node-exporter ClusterIP 10.0.216.169 <none> 9100/TCP 93s

service/prometheus-operated ClusterIP None <none> 9090/TCP 91s

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/kube-prometheus-stack-prometheus-node-exporter 10 10 10 10 10 <none> 93s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/kube-prometheus-stack-grafana 1/1 1 1 93s

deployment.apps/kube-prometheus-stack-kube-state-metrics 1/1 1 1 93s

deployment.apps/kube-prometheus-stack-operator 1/1 1 1 93s

NAME DESIRED CURRENT READY AGE

replicaset.apps/kube-prometheus-stack-grafana-6b5c8fd86c 1 1 1 93s

replicaset.apps/kube-prometheus-stack-kube-state-metrics-7877f4cc7c 1 1 1 93s

replicaset.apps/kube-prometheus-stack-operator-5859b9c949 1 1 1 93s

NAME READY AGE

statefulset.apps/alertmanager-kube-prometheus-stack-alertmanager 1/1 91s

statefulset.apps/prometheus-kube-prometheus-stack-prometheus 1/1 91s

sansae@win10pro-worksp:$

05. Volume Configuration

05-01. Check the StorageClass

- The StorageClass used by the Prometheus stack must be backed by Azure Disk, so use either 'default' or 'managed-premium'.

sansae@win10pro-worksp:/workspaces$ kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

azurefile kubernetes.io/azure-file Delete Immediate true 65d

azurefile-premium kubernetes.io/azure-file Delete Immediate true 65d

default (default) kubernetes.io/azure-disk Delete Immediate true 65d

managed kubernetes.io/azure-disk Delete WaitForFirstConsumer true 30d

managed-premium kubernetes.io/azure-disk Delete Immediate true 65d
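If the cluster has many StorageClasses, a small filter can pick out the Azure Disk backed ones. The sketch below is only an illustration: the helper name `azure_disk_classes` is hypothetical, and the sample rows are copied from the listing above so the snippet runs without a cluster.

```shell
#!/bin/sh
# Print the names of StorageClasses backed by Azure Disk.
# Reads `kubectl get sc` style output on stdin. The default class carries an
# extra "(default)" column, so match on the whole line, not a fixed field.
azure_disk_classes() {
  awk '/kubernetes\.io\/azure-disk/ { print $1 }'
}

# Sample rows taken from the listing above (no cluster needed).
sample='NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE
azurefile kubernetes.io/azure-file Delete Immediate true 65d
default (default) kubernetes.io/azure-disk Delete Immediate true 65d
managed-premium kubernetes.io/azure-disk Delete Immediate true 65d'

printf '%s\n' "$sample" | azure_disk_classes

# Against a live cluster:
#   kubectl get sc | azure_disk_classes
```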

05-02. Prometheus Volume

- Add storageClassName: managed-premium to the storage section of the Prometheus resource.

- Adjust the storage size to fit your project's requirements.

sansae@win10pro-worksp:/workspaces$ kubectl get prometheus -n monitor-po

NAME VERSION REPLICAS AGE

kube-prometheus-stack-prometheus v2.24.0 1 6d3h

sansae@win10pro-worksp:/workspaces$ kubectl edit prometheus kube-prometheus-stack-prometheus -n monitor-po

=============================================================

storage:

  volumeClaimTemplate:

    spec:

      accessModes:

      - ReadWriteOnce

      resources:

        requests:

          storage: 50Gi

      storageClassName: managed-premium

=============================================================

prometheus.monitoring.coreos.com/kube-prometheus-stack-prometheus edited

sansae@win10pro-worksp:/workspaces$ kubectl get pvc -n monitor-po

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

prometheus-kube-prometheus-stack-prometheus-db-prometheus-kube-prometheus-stack-prometheus-0 Bound pvc-64f88c7f-6fad-4d66-b6eb-xxxxxxxxx 50Gi RWO managed-premium 6d2h

05-03. Alertmanager Volume

- Add storageClassName: managed-premium to the storage section of the Alertmanager resource.

- Adjust the storage size to fit your project's requirements.

sansae@win10pro-worksp:/workspaces$ kubectl get alertmanager -n monitor-po

NAME VERSION REPLICAS AGE

kube-prometheus-stack-alertmanager v0.21.0 1 6d3h

sansae@win10pro-worksp:/workspaces$ kubectl edit alertmanager kube-prometheus-stack-alertmanager -n monitor-po

=============================================================

storage:

  volumeClaimTemplate:

    spec:

      accessModes:

      - ReadWriteOnce

      resources:

        requests:

          storage: 2Gi

      storageClassName: managed-premium

=============================================================

alertmanager.monitoring.coreos.com/kube-prometheus-stack-alertmanager edited

sansae@win10pro-worksp:/workspaces$ kubectl get pvc -n monitor-po

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

alertmanager-kube-prometheus-stack-alertmanager-db-alertmanager-kube-prometheus-stack-alertmanager-0 Bound pvc-5e7b02eb-7109-417d-9c14-xxxxxxxx 2Gi RWO managed-premium 6d2h

05-04. Grafana Volume

- Grafana is not a resource managed by the Prometheus Operator.

- Therefore, create the PVC manually and edit the Grafana Deployment so that it uses the PVC.

sansae@win10pro-worksp:/workspaces$ vim grafana-pvc.yaml

=============================================================

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

  name: grafana-pv-claim

  labels:

    app: grafana

spec:

  accessModes:

  - ReadWriteOnce

  resources:

    requests:

      storage: 1Gi

  storageClassName: managed-premium

=============================================================

sansae@win10pro-worksp:/workspaces$ kubectl create -f grafana-pvc.yaml -n monitor-po

persistentvolumeclaim/grafana-pv-claim created

sansae@win10pro-worksp:/workspaces$ kubectl get deploy -n monitor-po

NAME READY UP-TO-DATE AVAILABLE AGE

kube-prometheus-stack-grafana 1/1 1 1 6d3h

sansae@win10pro-worksp:/workspaces$ kubectl edit deploy kube-prometheus-stack-grafana -n monitor-po

=============================================================

volumeMounts:

- mountPath: /var/lib/grafana

  name: grafana-persistent-storage

-------------------------------------------------------

volumes:

- name: grafana-persistent-storage

  persistentVolumeClaim:

    claimName: grafana-pv-claim

=============================================================

deployment.apps/kube-prometheus-stack-grafana edited

sansae@win10pro-worksp:/workspaces$ kubectl get pvc -n monitor-po

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

grafana-pv-claim Bound pvc-a13463fa-ebda-4632-a9c0-xxxxxxxx 1Gi RWO managed-premium 6d2h
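Manual `kubectl edit` changes like the ones in this section can be overwritten by a later `helm upgrade`. As an alternative, the same storage settings could be kept in a Helm values file. The sketch below is a suggestion, not part of the original procedure: the file name `storage-values.yaml` is hypothetical, and the key names reflect the chart's values.yaml as I understand it, so verify them with `helm show values prometheus-community/kube-prometheus-stack` for your chart version.

```shell
#!/bin/sh
set -eu

# Hypothetical file name. Sizes and storage class mirror the manual edits above.
cat > storage-values.yaml <<'EOF'
prometheus:
  prometheusSpec:
    storageSpec:
      volumeClaimTemplate:
        spec:
          storageClassName: managed-premium
          accessModes: ["ReadWriteOnce"]
          resources:
            requests:
              storage: 50Gi
alertmanager:
  alertmanagerSpec:
    storage:
      volumeClaimTemplate:
        spec:
          storageClassName: managed-premium
          accessModes: ["ReadWriteOnce"]
          resources:
            requests:
              storage: 2Gi
grafana:
  persistence:
    enabled: true
    storageClassName: managed-premium
    size: 1Gi
EOF

# Opt-in: set APPLY=1 to roll the values into the existing release.
if [ "${APPLY:-0}" = "1" ]; then
  helm upgrade kube-prometheus-stack prometheus-community/kube-prometheus-stack \
    -n monitor-po -f storage-values.yaml
fi
```

With this approach the Grafana PVC is created by the chart itself, so the hand-written grafana-pvc.yaml would not be needed.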

06. Service Connection

06-01. Prometheus Operated

sansae@win10pro-worksp:$ kubectl get svc -n monitor-po

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 30m

kube-prometheus-stack-alertmanager ClusterIP 10.0.92.245 <none> 9093/TCP 30m

kube-prometheus-stack-grafana ClusterIP 10.0.240.51 <none> 80/TCP 30m

kube-prometheus-stack-kube-state-metrics ClusterIP 10.0.47.252 <none> 8080/TCP 30m

kube-prometheus-stack-operator ClusterIP 10.0.215.243 <none> 443/TCP 30m

kube-prometheus-stack-prometheus ClusterIP 10.0.152.193 <none> 9090/TCP 30m

kube-prometheus-stack-prometheus-node-exporter ClusterIP 10.0.216.169 <none> 9100/TCP 30m

prometheus-operated ClusterIP None <none> 9090/TCP 30m

sansae@win10pro-worksp:$ kubectl port-forward service/prometheus-operated 9090 -n monitor-po

Forwarding from 127.0.0.1:9090 -> 9090

Forwarding from [::1]:9090 -> 9090

06-02. Grafana

sansae@win10pro-worksp:$ kubectl port-forward service/kube-prometheus-stack-grafana 8000:80 -n monitor-po

Forwarding from 127.0.0.1:8000 -> 3000

Forwarding from [::1]:8000 -> 3000

Handling connection for 8000

- default user/password is admin/prom-operator

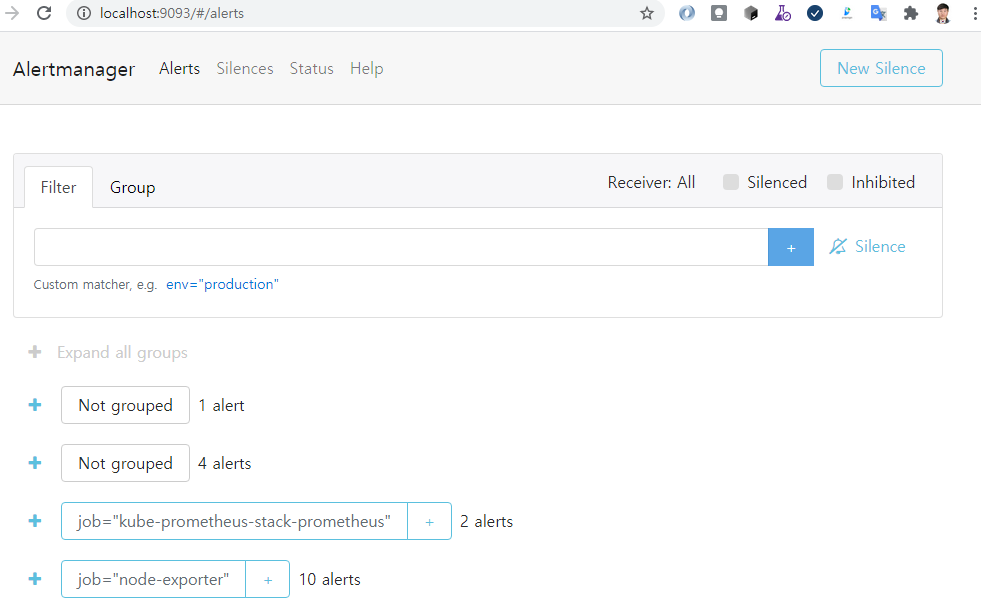

06-03. AlertManager

sansae@win10pro-worksp:$ kubectl port-forward service/kube-prometheus-stack-alertmanager 9093:9093 -n monitor-po

Forwarding from 127.0.0.1:9093 -> 9093

Forwarding from [::1]:9093 -> 9093
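With the three port-forwards from this section running, a quick check of each component's health endpoint can confirm that the connections work. This is a minimal sketch: the function names are hypothetical, the local ports are the ones used above, and the paths (`/-/healthy` for Prometheus and Alertmanager, `/api/health` for Grafana) are the standard health endpoints of those projects.

```shell
#!/bin/sh
# Local ports are the ones used by the port-forward commands above.
health_urls() {
  echo "http://127.0.0.1:9090/-/healthy"    # Prometheus
  echo "http://127.0.0.1:8000/api/health"   # Grafana
  echo "http://127.0.0.1:9093/-/healthy"    # Alertmanager
}

# Probe every URL and report OK or FAIL per endpoint.
check_all() {
  for url in $(health_urls); do
    if curl -fsS --max-time 5 "$url" >/dev/null 2>&1; then
      echo "OK   $url"
    else
      echo "FAIL $url"
    fi
  done
}

# Opt-in: set CHECK=1 once the port-forwards are running.
if [ "${CHECK:-0}" = "1" ]; then
  check_all
fi
```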

Note: when uninstalling, the following resources must be deleted in addition to the namespace

for n in $(kubectl get namespaces -o jsonpath='{..metadata.name}'); do

  kubectl delete --all --namespace="$n" prometheus,servicemonitor,podmonitor,alertmanager

done

kubectl delete crd alertmanagerconfigs.monitoring.coreos.com

kubectl delete crd alertmanagers.monitoring.coreos.com

kubectl delete crd podmonitors.monitoring.coreos.com

kubectl delete crd probes.monitoring.coreos.com

kubectl delete crd prometheuses.monitoring.coreos.com

kubectl delete crd prometheusrules.monitoring.coreos.com

kubectl delete crd servicemonitors.monitoring.coreos.com

kubectl delete crd thanosrulers.monitoring.coreos.com

kubectl delete clusterrole kube-prometheus-stack-grafana-clusterrole

kubectl delete clusterrole kube-prometheus-stack-kube-state-metrics

kubectl delete clusterrole kube-prometheus-stack-operator

kubectl delete clusterrole kube-prometheus-stack-operator-psp

kubectl delete clusterrole kube-prometheus-stack-prometheus

kubectl delete clusterrole kube-prometheus-stack-prometheus-psp

kubectl delete clusterrole psp-kube-prometheus-stack-kube-state-metrics

kubectl delete clusterrole psp-kube-prometheus-stack-prometheus-node-exporter

kubectl delete clusterrolebinding kube-prometheus-stack-grafana-clusterrolebinding

kubectl delete clusterrolebinding kube-prometheus-stack-kube-state-metrics

kubectl delete clusterrolebinding kube-prometheus-stack-operator

kubectl delete clusterrolebinding kube-prometheus-stack-operator-psp

kubectl delete clusterrolebinding kube-prometheus-stack-prometheus

kubectl delete clusterrolebinding kube-prometheus-stack-prometheus-psp

kubectl delete clusterrolebinding psp-kube-prometheus-stack-kube-state-metrics

kubectl delete clusterrolebinding psp-kube-prometheus-stack-prometheus-node-exporter

kubectl delete svc kube-prometheus-stack-coredns -n kube-system

kubectl delete svc kube-prometheus-stack-kube-controller-manager -n kube-system

kubectl delete svc kube-prometheus-stack-kube-etcd -n kube-system

kubectl delete svc kube-prometheus-stack-kube-proxy -n kube-system

kubectl delete svc kube-prometheus-stack-kube-scheduler -n kube-system

kubectl delete svc kube-prometheus-stack-kubelet -n kube-system

kubectl delete svc prometheus-kube-prometheus-kubelet -n kube-system

kubectl delete MutatingWebhookConfiguration kube-prometheus-stack-admission

kubectl delete ValidatingWebhookConfiguration kube-prometheus-stack-admission
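The per-CRD delete commands above can drift as the Operator adds or removes CRDs between versions. A filter on the API group is one way to avoid hard-coding the names. The sketch below is an assumption-laden alternative, not the original procedure: the helper name `operator_crds` is hypothetical, and deletion is opt-in because removing a CRD also removes every custom resource of that kind in the cluster.

```shell
#!/bin/sh
# Keep only CRDs in the Prometheus Operator API group.
# Input: one resource name per line, as printed by `kubectl get crd -o name`.
operator_crds() {
  grep 'monitoring\.coreos\.com$' || true
}

# Opt-in: set DELETE=1 to actually remove the CRDs from the current cluster.
if [ "${DELETE:-0}" = "1" ]; then
  kubectl get crd -o name | operator_crds | while read -r crd; do
    kubectl delete "$crd"
  done
fi
```

Running `kubectl get crd -o name | operator_crds` without DELETE=1 lists what would be removed, which is a reasonable dry run before deleting anything.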