Storage

- You can use the default local on-disk storage, or optionally the remote storage system

- Local storage: a local time series database in a custom Prometheus format

- Remote storage: you can read/write samples to a remote system in a standardized format

- Currently it uses a snappy-compressed protocol buffer encoding over HTTP, but might change in the future (to use gRPC or HTTP/2)

Local Storage

- Prometheus >= 2.0 uses a new storage engine which dramatically increases scalability

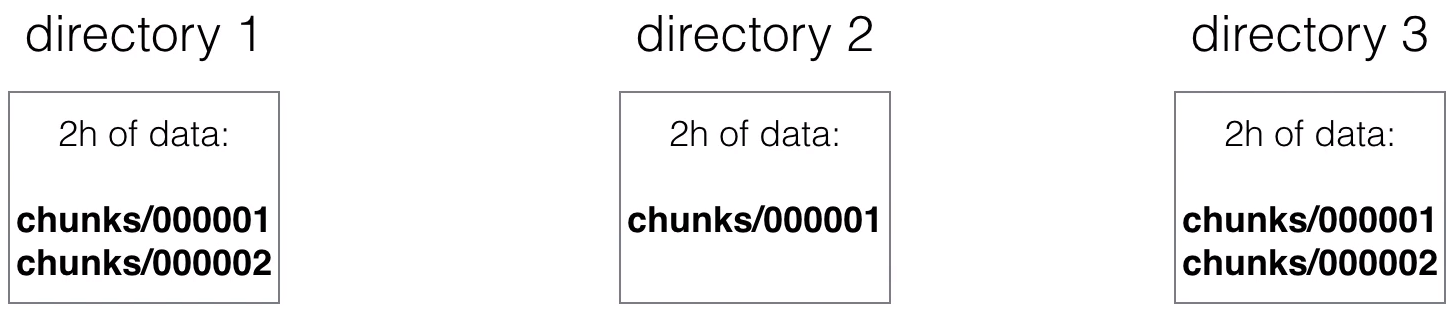

- Ingested samples are grouped in blocks of two hours

- Those 2h samples are stored in separate directories (in the data directory of Prometheus)

- Writes are batched and written to disk in chunks, containing multiple data points

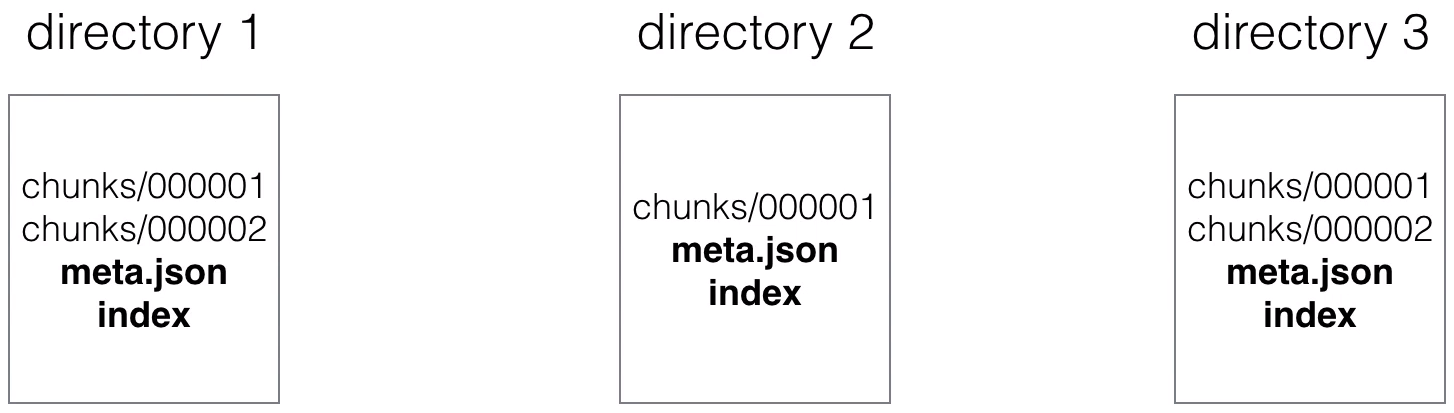

- Every directory also has an index file (index) and a metadata file (meta.json)

- It stores the metric names and the labels, and provides an index form the metric names and labels to the series in the chunk files

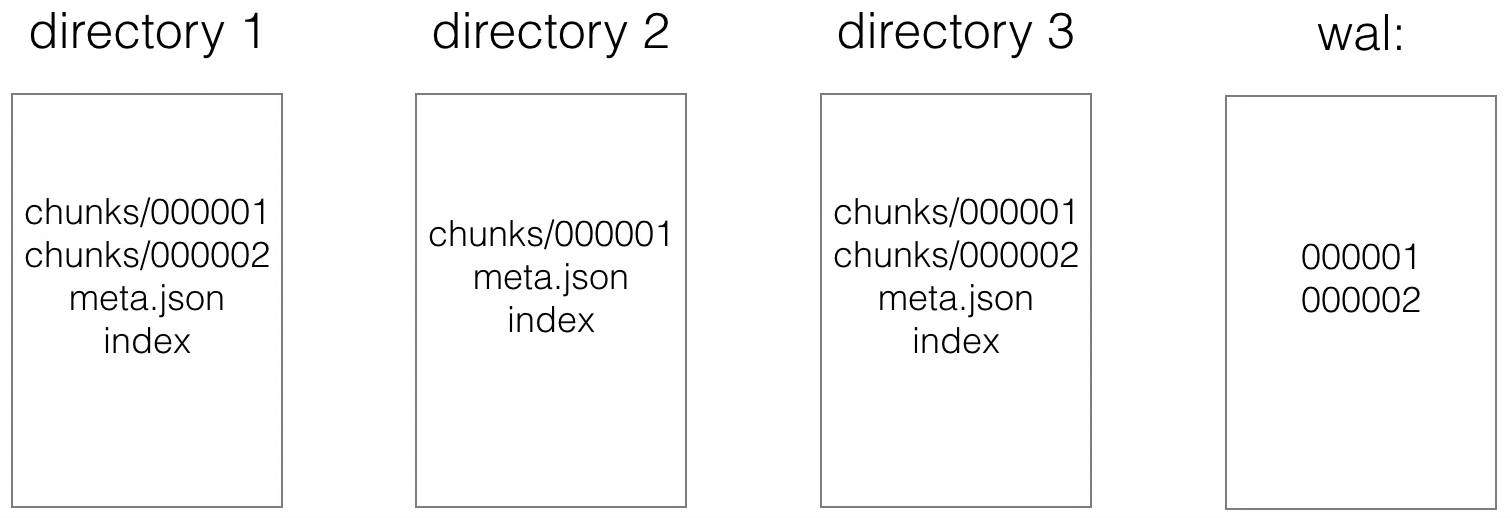

- The most recent data is kept in memory

- You don't want to loose the in-memory data during a crash, so the data also needs to be persisted to disk. This is done using a write-ahead-log (WAL)

- Write Ahead Log (WAL)

- it's quicker to append to a file (like a log) than making(multiple) random read/writes

- If there's a server crash and the data from memory is lost, then the WAL will be replayed

- This way no data will to lost or corrupted during a crash

- When series gets deleted, a tombstone file gets create

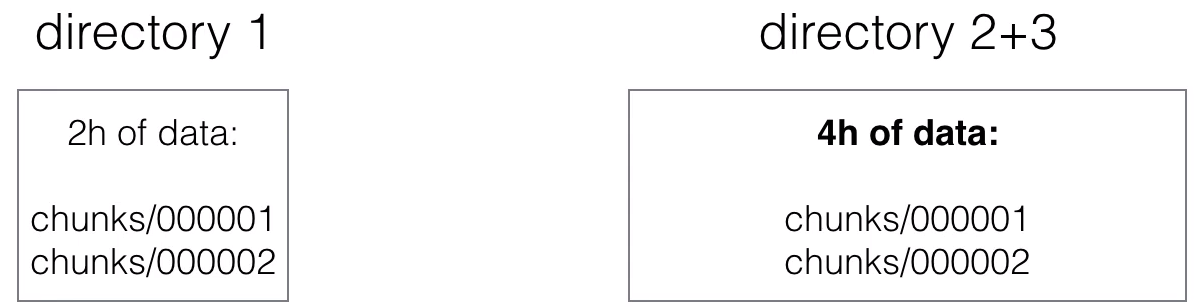

- The initial 2-hour blocks are merged in the background to from longer blocks

- This is called compaction

- The horizontal partitioning gives a lot of benefits:

- When querying, the blocks not in the time range can be skipped

- When completing a block, data only needs to be added, and not modified (avoids write-amplification)

- Recent data is kept in memory, so can be queried quicker

- Deleting old data is only a matter of deleting directories on the filesystem.

- Compaction:

- When querying, blocks have to be merged together to be able to calculate the results

- Too many blocks could cause too much merging overhead, so blocks are compacted

- 2 blocks are merged and form a newly created (often larger) block

- Compaction can also modify data:

- dropping deleted data or restructuring the chunks to increase the query performance

- The index:

- Having horizontal partitioning already makes most queries quicker, but not those that need to go through all the data to get the result

- The index is an inverted index to provide better query performance, also in cases where all data needs to be queried

- Each series is assigned a unique ID (e.g. ID 1,2 and 3)

- The index will contain an inverted index for the labels, for example for label env=production, it'll have 1 and 3 as IDs if those series contain the label env=production

- What about Disk size?

- On average, Prometheus needs 1-2 bytes per sample

- You can use the following formula to calculate the disk space needed:

needed_disk_space = retention_time_seconds * ingested_samples_per_second * bytes_per_sample

- How to reduce disk size?

- You can increase the scrape interval, which will get you less data

- You can decrease the targets or series you scrape

- Or you can can reduce the retention (how long you keep the data)

--storage.tsdb.retention: This determines when to remove old data. Defaults to 15d

Remote Storage

- Remote storage is primarily focused at long term storage

- Currently there are adapters available for the following solutions:

AppOptics: write Graphite: write Chronix: write InfluxDB: read and write Cortex: read and write OpenTSDB: write CreateDB: read and write PostgreSQL/TimescaleDB: read and write Gnocchi: write SignalFx: write Source: https://prometheus.io/docs/operating/integrations/@remote-endpoints-and-storage

References

- To read the full story of Prometheus time series database, read the blog post from Fabian Reinartz at https://fabxc.org/tsdb/