https://medium.com/avmconsulting-blog/how-to-deploy-an-efk-stack-to-kubernetes-ebc1b539d063

01. Overview

- EFK

- Elasticsearch

- Fluentd

- Kibana

- What is Helm

02. Prerequisites

- Kubernetes 1.16+

- Helm 3.x

03. Installation

03-01. Create the Namespace

- Create the namespace into which the EFK stack will be deployed.

sansae@win10pro-worksp:/workspaces$ kubectl create ns monitor-efk
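
Running `kubectl create ns` a second time fails once the namespace exists. A minimal sketch of an idempotent variant follows; the function name `ensure_namespace` is made up for illustration, and `--dry-run=client` assumes kubectl 1.18 or newer.

```shell
# Hypothetical helper: create the namespace only if it is missing.
# `kubectl create --dry-run=client -o yaml` renders the manifest without
# sending it to the API server, and `kubectl apply` leaves an existing
# namespace unchanged instead of failing.
ensure_namespace() {
  kubectl create namespace "$1" --dry-run=client -o yaml | kubectl apply -f -
}

# Usage: ensure_namespace monitor-efk
```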

03-02. Check the StorageClass

- Check the available StorageClasses so that Elasticsearch can use a PersistentVolume.

- Elasticsearch needs its volume created on Azure Disk, so we will use 'managed-premium'.

sansae@win10pro-worksp:/workspaces$ kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

azurefile kubernetes.io/azure-file Delete Immediate true 63d

azurefile-premium kubernetes.io/azure-file Delete Immediate true 63d

default (default) kubernetes.io/azure-disk Delete Immediate true 63d

managed kubernetes.io/azure-disk Delete WaitForFirstConsumer true 28d

managed-premium kubernetes.io/azure-disk Delete Immediate true 63d
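
The choice of 'managed-premium' can be double-checked from the command line. The sketch below assumes the provisioner string shown in the listing above (`kubernetes.io/azure-disk`); `is_azure_disk_sc` is a hypothetical helper name, and clusters that use a CSI driver may report a different provisioner.

```shell
# Hypothetical helper: succeeds only if the named StorageClass reports
# the in-tree Azure Disk provisioner.
is_azure_disk_sc() {
  local provisioner
  # .provisioner is a top-level field of the StorageClass object.
  provisioner=$(kubectl get sc "$1" -o jsonpath='{.provisioner}') || return 1
  [ "$provisioner" = "kubernetes.io/azure-disk" ]
}

# Usage: is_azure_disk_sc managed-premium && echo "backed by Azure Disk"
```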

03-03. Elasticsearch

- Pin Elasticsearch to a stable version via imageTag.

- To persist data, a PersistentVolume must be allocated on Azure Disk, so set storageClassName to 'managed-premium'.

sansae@win10pro-worksp:/workspaces$ git clone https://github.com/elastic/helm-charts.git

sansae@win10pro-worksp:/workspaces$ mv helm-charts elastic

sansae@win10pro-worksp:/workspaces$ cd elastic

sansae@win10pro-worksp:/workspaces/elastic$ vi elasticsearch/values.yaml

=========================================================

imageTag: "7.12.0"

----------------------------------------

volumeClaimTemplate:

  storageClassName: managed-premium

  accessModes: [ "ReadWriteOnce" ]

  resources:

    requests:

      storage: 30Gi

=========================================================
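
Editing the chart's own values.yaml works, but the same settings could also be kept in a separate override file and passed to Helm with `-f`. A sketch under that assumption; the file name `es-values.yaml` and the function name are hypothetical, and the values are the ones shown above.

```shell
# Write only the overridden keys to a separate file (hypothetical name),
# leaving the cloned chart's values.yaml untouched.
cat > es-values.yaml <<'EOF'
imageTag: "7.12.0"
volumeClaimTemplate:
  storageClassName: managed-premium
  accessModes: ["ReadWriteOnce"]
  resources:
    requests:
      storage: 30Gi
EOF

# Hypothetical wrapper: same install command as in this section, plus -f.
# Run it from the cloned elastic directory.
install_elasticsearch_with_overrides() {
  helm install elasticsearch/ --generate-name -n monitor-efk -f es-values.yaml
}
```

Keeping overrides in their own file makes it easier to see what differs from the chart defaults after a `git pull` of the chart repository.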

sansae@win10pro-worksp:/workspaces/elastic$ helm install elasticsearch/ --generate-name -n monitor-efk

NAME: elasticsearch-1617184828

LAST DEPLOYED: Wed Mar 31 19:00:30 2021

NAMESPACE: monitor-efk

STATUS: deployed

REVISION: 1

NOTES:

1. Watch all cluster members come up.

$ kubectl get pods --namespace=monitor-efk -l app=elasticsearch-master -w

2. Test cluster health using Helm test.

$ helm --namespace=monitor-efk test elasticsearch-1617184828

sansae@win10pro-worksp:/workspaces/elastic$ kubectl get pod -n monitor-efk

NAME READY STATUS RESTARTS AGE

elasticsearch-master-0 1/1 Running 0 4m20s

elasticsearch-master-1 1/1 Running 0 4m20s

elasticsearch-master-2 1/1 Running 0 4m20s
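
Instead of polling `kubectl get pod`, the rollout could be awaited explicitly and the cluster health read from Elasticsearch itself. A rough sketch: the function names are hypothetical, the 10 minute timeout is arbitrary, and it assumes the chart serves plain HTTP on port 9200 without authentication.

```shell
# Hypothetical helper: block until the elasticsearch-master StatefulSet
# in namespace $1 has finished rolling out, or the timeout expires.
wait_for_elasticsearch() {
  kubectl rollout status statefulset/elasticsearch-master -n "$1" --timeout=10m
}

# Hypothetical helper: extract the "status" value (green, yellow, red)
# from a single-line _cluster/health JSON document read on stdin.
health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Usage, with this running in another terminal:
#   kubectl port-forward svc/elasticsearch-master 9200 -n monitor-efk
# then:
#   wait_for_elasticsearch monitor-efk
#   curl -s http://127.0.0.1:9200/_cluster/health | health_status
```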

03-03. Fluentd

- Set the Elasticsearch host to the Service name 'elasticsearch-master'.

- Set the Elasticsearch port to 9200.

sansae@win10pro-worksp:/workspaces$ git clone https://github.com/fluent/helm-charts.git

sansae@win10pro-worksp:/workspaces$ mv helm-charts fluent

sansae@win10pro-worksp:/workspaces$ cd fluent

sansae@win10pro-worksp:/workspaces/fluent$ cd charts

sansae@win10pro-worksp:/workspaces/fluent/charts$ vi fluentd/values.yaml

=========================================================

env:

  #- name: "FLUENTD_CONF"

  #  value: "../../etc/fluent/fluent.conf"

  - name: FLUENT_ELASTICSEARCH_HOST

    value: "elasticsearch-master"

  - name: FLUENT_ELASTICSEARCH_PORT

    value: "9200"

=========================================================
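
The short name 'elasticsearch-master' resolves because Fluentd runs in the same namespace as the Service. If Fluentd were deployed to a different namespace, the fully qualified Service name would be needed instead. A small sketch, assuming the default cluster domain `cluster.local`; `svc_fqdn` is a hypothetical helper.

```shell
# Hypothetical helper: build the in-cluster DNS name of a Service.
#   $1 = Service name, $2 = namespace, $3 = cluster domain (optional)
svc_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "$2" "${3:-cluster.local}"
}

# Usage: svc_fqdn elasticsearch-master monitor-efk
```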

sansae@win10pro-worksp:/workspaces/fluent/charts$ helm install fluentd --generate-name -n monitor-efk

NAME: fluentd-1617186347

LAST DEPLOYED: Wed Mar 31 19:25:49 2021

NAMESPACE: monitor-efk

STATUS: deployed

REVISION: 1

NOTES:

Get Fluentd build information by running these commands:

export POD_NAME=$(kubectl get pods --namespace monitor-efk -l "app.kubernetes.io/name=fluentd,app.kubernetes.io/instance=fluentd-1617186347" -o jsonpath="{.items[0].metadata.name}")

echo "curl http://127.0.0.1:24231/metrics for Fluentd metrics"

kubectl --namespace monitor-efk port-forward $POD_NAME 24231:24231

sansae@win10pro-worksp:/workspaces/fluent/charts$ kubectl get pod -n monitor-efk

NAME READY STATUS RESTARTS AGE

elasticsearch-master-0 1/1 Running 0 16m

elasticsearch-master-1 1/1 Running 0 16m

elasticsearch-master-2 1/1 Running 0 16m

fluentd-1617190066-9g8n8 1/1 Running 2 12m

fluentd-1617190066-9vnjh 1/1 Running 3 12m

fluentd-1617190066-ccmth 0/1 CrashLoopBackOff 7 12m

fluentd-1617190066-kfr96 1/1 Running 2 12m

fluentd-1617190066-kxtmh 1/1 Running 6 12m

fluentd-1617190066-n4566 1/1 Running 6 12m

fluentd-1617190066-n77b8 1/1 Running 4 12m

fluentd-1617190066-nxxq5 1/1 Running 2 12m

fluentd-1617190066-v4dp9 1/1 Running 2 12m

fluentd-1617190066-x4l6g 1/1 Running 6 12m
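
The listing above includes a pod in CrashLoopBackOff. A small filter like the following could surface such pods; `not_running` is a hypothetical name, and the column positions assume the default `kubectl get pod` output.

```shell
# Hypothetical helper: read `kubectl get pod` output on stdin, skip the
# header row, and print name and status of every pod whose STATUS
# column (third field) is not "Running".
not_running() {
  awk 'NR > 1 && $3 != "Running" { print $1, $3 }'
}

# Usage: kubectl get pod -n monitor-efk | not_running
```

For a pod reported this way, `kubectl logs <pod> --previous -n monitor-efk` shows the output of the last crashed container.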

03-04. Kibana

sansae@win10pro-worksp:/workspaces$ cd elastic

sansae@win10pro-worksp:/workspaces/elastic$ vi kibana/values.yaml

=========================================================

imageTag: "7.12.0"

=========================================================

sansae@win10pro-worksp:/workspaces/elastic$ helm install kibana/ --generate-name -n monitor-efk

NAME: kibana-1617190735

LAST DEPLOYED: Wed Mar 31 20:38:57 2021

NAMESPACE: monitor-efk

STATUS: deployed

REVISION: 1

TEST SUITE: None

sansae@win10pro-worksp:/workspaces/elastic$ kubectl get pod -n monitor-efk

NAME READY STATUS RESTARTS AGE

elasticsearch-master-0 1/1 Running 0 15h

elasticsearch-master-1 1/1 Running 0 15h

elasticsearch-master-2 1/1 Running 0 15h

fluentd-1617190066-9g8n8 1/1 Running 2 14h

fluentd-1617190066-9vnjh 1/1 Running 3 14h

fluentd-1617190066-ccmth 1/1 Running 8 14h

fluentd-1617190066-kfr96 1/1 Running 2 14h

fluentd-1617190066-kxtmh 1/1 Running 6 14h

fluentd-1617190066-n4566 1/1 Running 6 14h

fluentd-1617190066-n77b8 1/1 Running 4 14h

fluentd-1617190066-nxxq5 1/1 Running 2 14h

fluentd-1617190066-v4dp9 1/1 Running 2 14h

fluentd-1617190066-x4l6g 1/1 Running 6 14h

kibana-1617190735-kibana-77f8f5b8c8-zxt8b 1/1 Running 0 14h

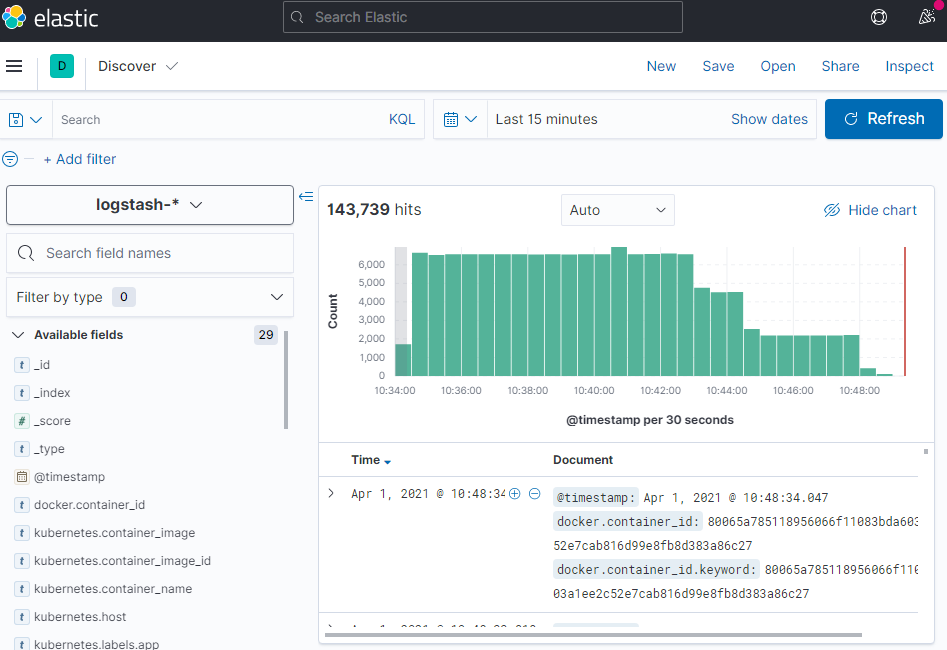

04. Check the Dashboard

sansae@win10pro-worksp:/workspaces/elastic$ kubectl get svc -n monitor-efk

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

elasticsearch-master ClusterIP 10.0.186.68 <none> 9200/TCP,9300/TCP 18m

elasticsearch-master-headless ClusterIP None <none> 9200/TCP,9300/TCP 18m

fluentd-1617190066 ClusterIP 10.0.173.141 <none> 24231/TCP 14m

kibana-1617190735-kibana ClusterIP 10.0.17.173 <none> 5601/TCP 3m4s

sansae@win10pro-worksp:/workspaces/elastic$ kubectl port-forward svc/kibana-1617190735-kibana 5601 -n monitor-efk

Forwarding from 127.0.0.1:5601 -> 5601

Forwarding from [::1]:5601 -> 5601
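
With the port-forward running, Kibana should be reachable at http://127.0.0.1:5601 in a browser. A quick command-line check might look like the following; `kibana_http_code` is a hypothetical helper, and it assumes Kibana's `/api/status` endpoint is reachable without authentication, which should hold for a default 7.12 install.

```shell
# Hypothetical helper: print the HTTP status code returned by Kibana's
# status endpoint on the forwarded local port (default 5601).
kibana_http_code() {
  curl -s -o /dev/null -w '%{http_code}' "http://127.0.0.1:${1:-5601}/api/status"
}

# Usage (in a second terminal while the port-forward is active):
#   kibana_http_code
```

A 200 response suggests Kibana is up; other codes usually mean it is still starting or cannot reach Elasticsearch.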